Humans in the Loop

A story about why AI governance must belong to all of us.

The room was too small for the number of people squeezed into it, and the fluorescent lights flickered with the kind of uncertainty you never want in an AI lab. It was the weekly “Model Review Session,” the one meeting no one really wanted to attend, mostly because it forced everyone to admit the system wasn’t as finished as they had hoped.

Amir, the lead engineer, sat at the head of the table with a laptop full of graphs and confidence intervals. To him, the model was solid. Good accuracy. Low drift. Clean logs. He had stayed up half the night tightening a few loose metrics to prove it.

“You’re going to love these numbers,” he began.

But halfway across the table, Leila, the sociologist, shifted in her chair. She’d been interviewing real users all week — some enthusiastic, some frustrated, some quietly anxious — and her notes told a very different story.

“I’m sure the model is performing great,” she said gently, “but your ‘high-confidence category’ includes all the people who refused to answer the question because they didn’t trust the interface.”

Amir stared. “…They refused?”

“Yes,” she replied. “The button that says ‘Continue’ feels like a trap to them. They think the AI will judge them.”

He blinked in disbelief. The button was the problem?

From the corner, Tomoko, the linguist, raised her hand. “And in the Japanese version, ‘Continue’ was translated as something closer to ‘Submit for evaluation.’ That changes the entire emotional meaning.”

The engineer sank into his chair.

Before he could respond, Maya, the human-factors specialist, tapped the table. “Our eye-tracking tests showed users freeze at that screen. They’re overwhelmed. The cognitive load is too high. It’s not that they don’t understand the model — they don’t understand what we want from them.”

Behind her, the compliance officer added: “And legally, this violates transparency requirements. The choice architecture isn’t clear enough for consent.”

The room fell quiet.

Amir looked around. “So… what you’re saying is the model isn’t broken. We are.”

“No,” Leila said softly. “We’re just incomplete.”

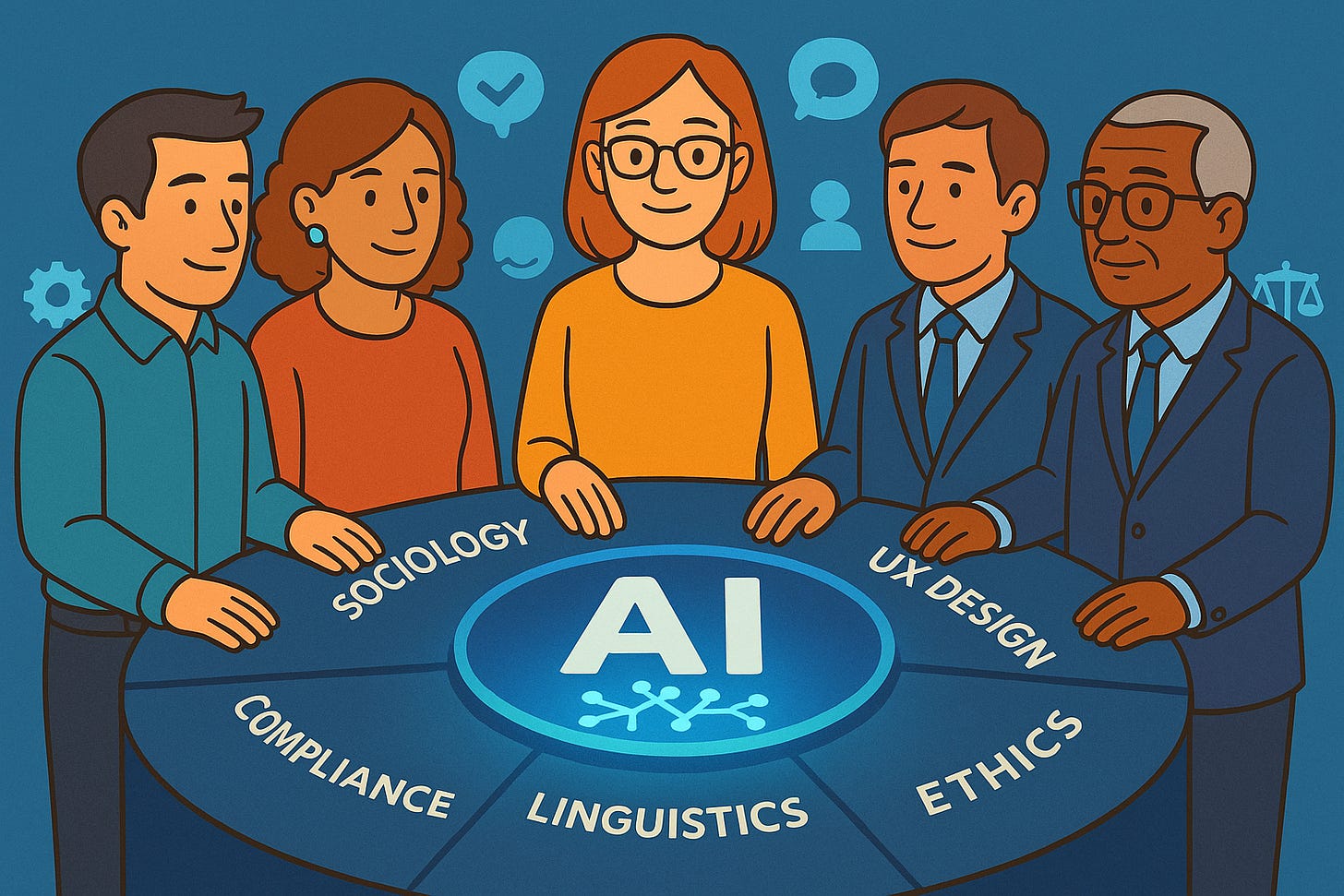

She pointed around the table:

engineers who understood model parameters

sociologists who understood social behaviors

linguists who decoded cultural nuance

UX designers who shaped the interface

ethicists who recognized how power flows

compliance analysts who guarded the rules

domain experts who lived in the real-world context

“All of us are the loop,” she said. “‘Humans in the loop’ isn’t just about oversight. It’s about perspective. No single discipline can see the whole landscape. But together… we can.”

Someone murmured: “So governance isn’t about slowing us down.”

Leila smiled. “Governance is about seeing the blind spots before they harm someone.”

The tension eased. Amir clicked to the next slide — the chart he had been proud of — and it suddenly felt small. Beautiful, but incomplete.

He closed the laptop.

“Alright,” he said. “Let’s rebuild this. All of us.”

For the first time since the project began, the meeting didn’t feel like a checkpoint.

It felt like a beginning.

Upsides of Cross-Disciplinary AI Governance

1. Fewer blind spots

Each discipline catches risks others overlook: cultural misalignment, usability failure, regulatory pitfalls, domain inaccuracies, and harm to vulnerable groups.

2. Stronger risk mitigation

Reviewing a system through several disciplinary lenses strengthens alignment with the NIST AI Risk Management Framework (AI RMF) and supports EU AI Act compliance across the AI lifecycle.

3. More trustworthy AI

Systems become more human-centered, transparent, accessible, and aligned with societal expectations, which in turn improves adoption.

4. Shared accountability

Governance becomes a team sport, reducing the “hero engineer” problem, where one person is expected to catch every risk alone.

Downsides (and how to manage them)

1. More stakeholders = slower decisions

Clearly assigned RACI roles (Responsible, Accountable, Consulted, Informed) and defined governance workflows keep that friction manageable.

2. Conflicting perspectives

Healthy disagreement is actually a governance asset if facilitated well.

3. Higher operational cost

Cross-disciplinary involvement takes planning and budget, but that cost is typically far lower than the cost of regulatory fines or public failures.

4. Coordination complexity

A governance program manager or AI risk lead can orchestrate alignment.

Cinematic Parallel: The Blind Spot Team

A helpful comparison comes from “The Martian.”

Mark Watney survives not because one expert saves him, but because:

botanists

astronauts

engineers

psychologists

mission control

international partners

worked together, each solving a piece of the puzzle the others could not.

AI governance works the same way:

No single discipline saves the mission.

The mission succeeds because every discipline supports the human at the center.

Takeaways for AI GRC Professionals

Cross-disciplinary governance isn’t optional: the NIST AI RMF explicitly calls for diverse, interdisciplinary teams, and the EU AI Act and ISO/IEC 42001 implicitly require them.

Technical accuracy is only one part of safety — social context, human factors, and linguistic nuance matter just as much.

Governance is the glue, not the brake — it unifies fragmented perspectives.

Human in the loop means more than oversight — it means human context in design, evaluation, and deployment.

The future AI workforce blends disciplines; it does not replace them.